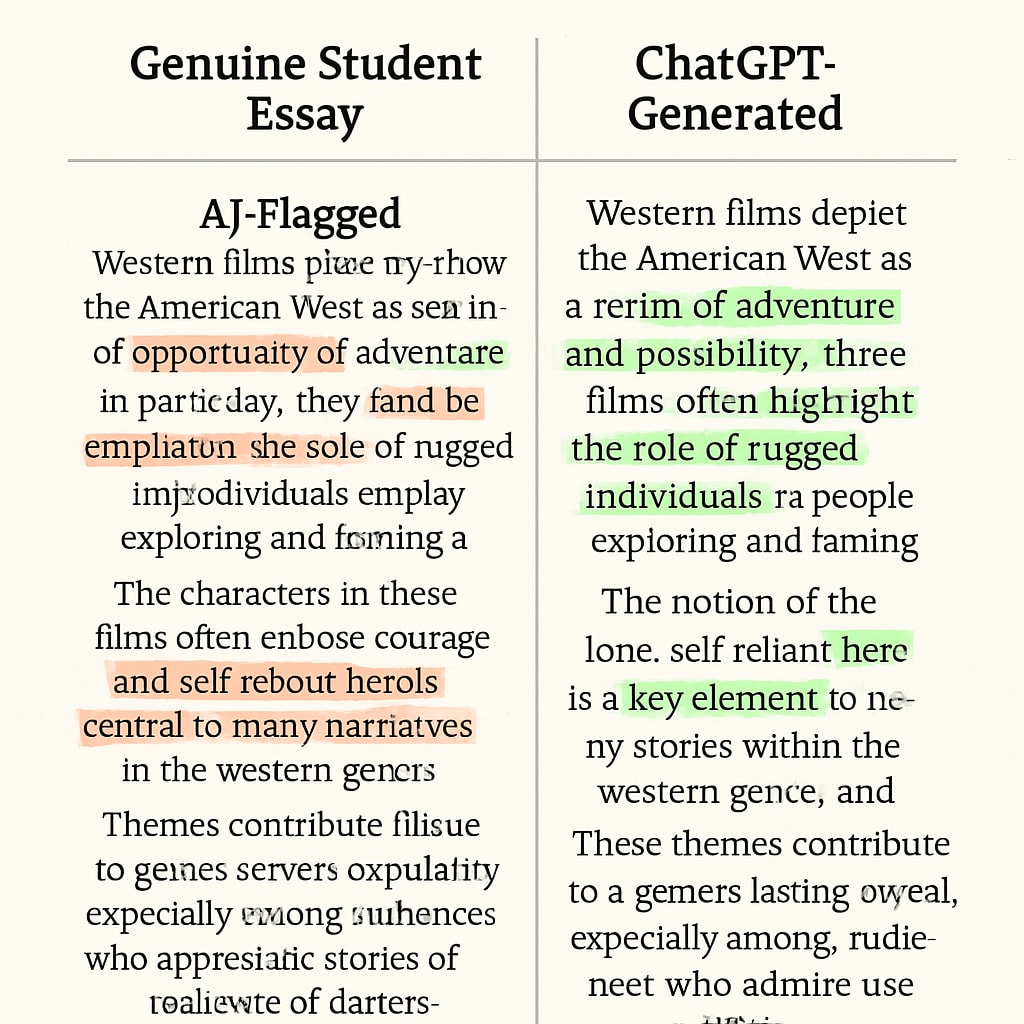

The rapid adoption of AI detectors in educational institutions has created a new frontier of technological judgment calls and raised hard questions about academic integrity and false positives. As universities increasingly rely on algorithms to screen student submissions, concerns are growing about the tools' accuracy and fairness. According to a 2023 study in Computers & Education, leading AI detection tools show error rates of 12% to 38% when analyzing authentic human writing.

The Science Behind AI Detection Failures

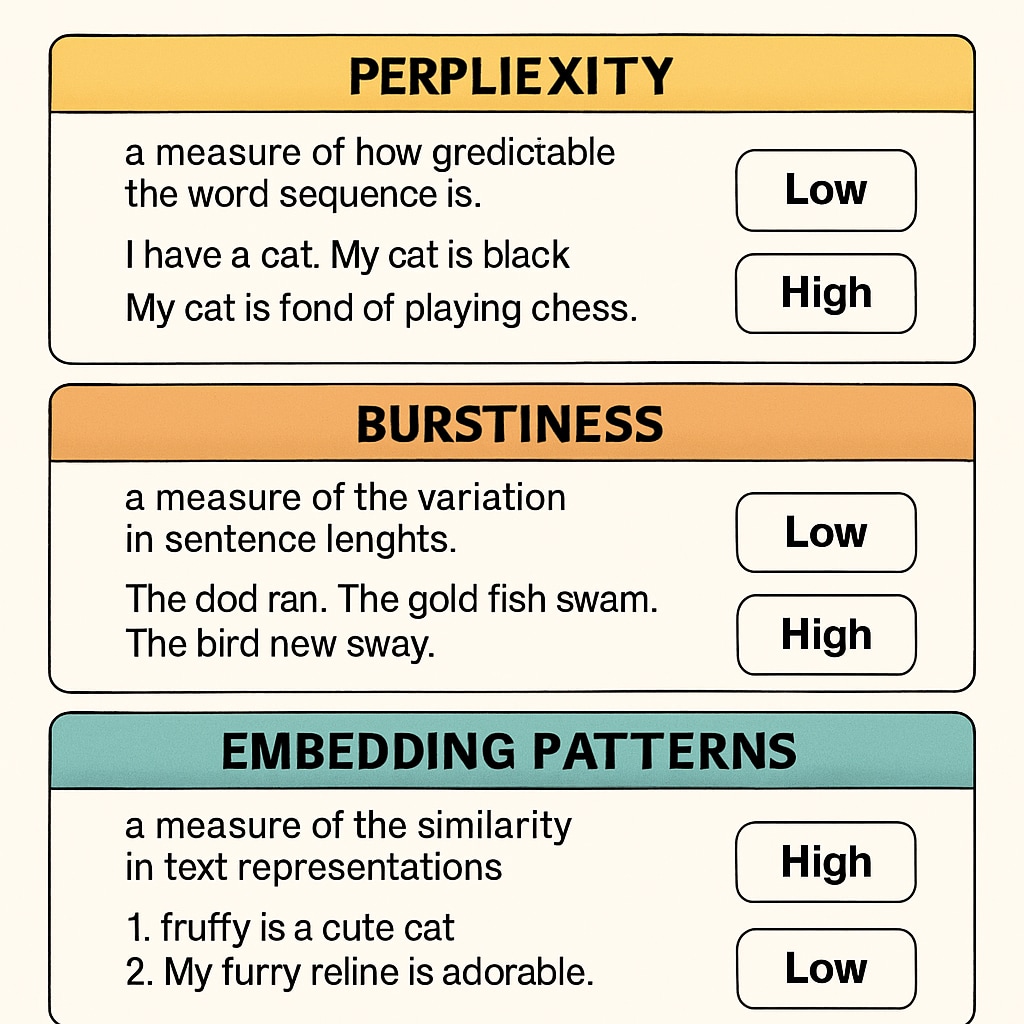

Current-generation AI detection tools analyze three key characteristics:

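- Perplexity: Measures how predictable the text is to a language model, treating low perplexity as a sign of AI authorship (often misclassifying concise academic writing)

- Burstiness: Measures variation in sentence length and structure, treating uniform text as suspicious (penalizing structured technical papers)

- Embedding patterns: Detect word-choice tendencies associated with model output (biased against non-native English speakers)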
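The following Python sketch shows how the first two signals might be computed and combined into a verdict. It is an illustration under stated assumptions, not the method of any particular commercial detector: the per-token log-probabilities are assumed to come from some language model and are passed in rather than computed, burstiness is approximated as the coefficient of variation of sentence lengths, and the thresholds in the hypothetical `looks_machine_written` rule are arbitrary.

```python
import math
import re
from statistics import mean, pstdev


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity from per-token natural-log probabilities.

    Assumption: the log-probabilities come from some language model.
    Lower values mean the text was more predictable to that model.
    """
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-mean(token_logprobs))


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths, in words.

    A simple proxy: 0.0 means every sentence has the same length.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def looks_machine_written(ppl: float, burst: float,
                          ppl_threshold: float = 20.0,
                          burst_threshold: float = 0.3) -> bool:
    """Hypothetical rule: flag text that is both predictable and uniform."""
    return ppl < ppl_threshold and burst < burst_threshold


# Concise, human-written lab notes with made-up token log-probabilities.
lab_notes = ("The sample was heated to 80 degrees. "
             "The mass was recorded every minute. "
             "The data were plotted on a log scale.")
ppl = perplexity([-1.0, -2.0, -3.0])  # exp(2), about 7.39
burst = burstiness(lab_notes)         # sentence lengths 7, 6, 8
print(looks_machine_written(ppl, burst))
```

Because the three sentences are short and similar in length, burstiness comes out near 0.12, and with the made-up low perplexity this rule flags honest, tersely written lab notes. That is the same failure mode described above for concise academic and structured technical writing.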

This approach creates systemic vulnerabilities. The Stanford Institute for Human-Centered Artificial Intelligence (HAI) has reported that no existing tool can definitively distinguish AI-generated content from human writing, and the problem is most acute in the following scenarios.

High-Risk Writing Scenarios

Certain kinds of academic work face disproportionately high false-positive rates:

- Technical reports with standardized formatting

- Literature reviews citing common sources

- Non-native English submissions

- Concise answer formats (e.g., STEM problem sets)
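A false-positive rate is the share of genuinely human-written submissions that a detector flags: FP / (FP + TN). The Python sketch below computes that rate per writing category, which is one way a claim of disproportionate false positives could be checked. The input format, the function name `false_positive_rates`, and the toy records are hypothetical and chosen for illustration only; they are not real measurements.

```python
from collections import defaultdict


def false_positive_rates(results):
    """Per-category false-positive rate over human-written samples.

    `results` is a list of (category, flagged) pairs. Every sample is
    assumed to be human-written, so each flag is a false positive and
    FPR = false positives / (false positives + true negatives).
    """
    flagged = defaultdict(int)
    total = defaultdict(int)
    for category, was_flagged in results:
        total[category] += 1
        if was_flagged:
            flagged[category] += 1
    return {category: flagged[category] / total[category] for category in total}


# Hypothetical toy records, not real detector output.
records = [
    ("STEM problem set", True),
    ("STEM problem set", False),
    ("personal essay", False),
    ("personal essay", False),
]
print(false_positive_rates(records))
```

Grouping by category matters because a single overall error rate can hide a tool that performs acceptably on essays while flagging a large share of problem sets or non-native English submissions.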

Proactive Defense Strategies

Students accused of submitting AI-generated work should:

- Request the specific evidence used for detection

- Provide draft versions and research notes

- Cite discipline-specific writing conventions that explain the flagged style

- Request human expert review

Institutions such as MIT now recommend pairing AI detection with oral assessments when serious doubts exist. As detection technology evolves, fair evaluation processes remain essential to protecting academic integrity without creating technological witch hunts.