AI detectors, academic integrity, and false positives have become closely linked points of contention in modern education. As institutions increasingly adopt AI-powered tools to identify machine-generated content, cases keep emerging of original student work being incorrectly flagged. These false positives raise critical questions about how reliable the systems are and how they affect scholarly evaluation.

The Unseen Limitations of AI Detection Systems

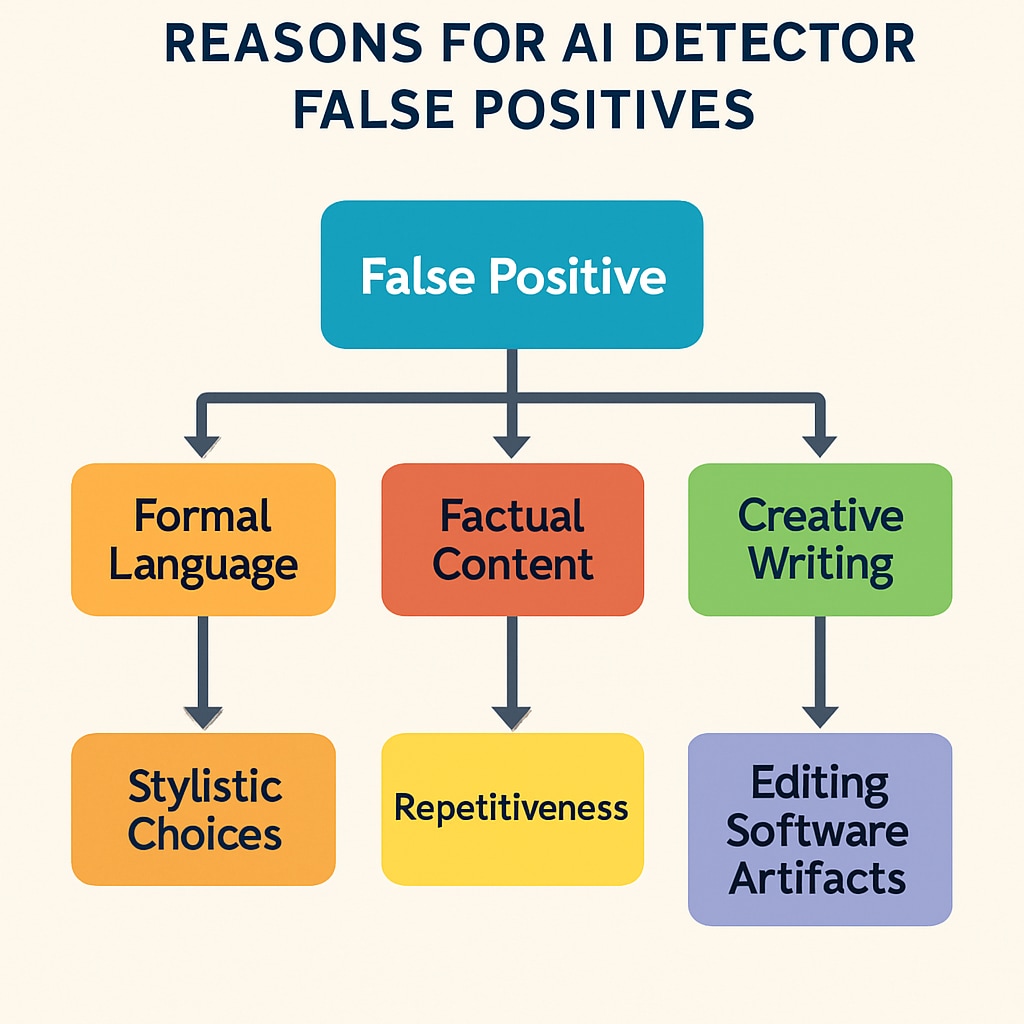

Current AI detection tools primarily analyze writing patterns, vocabulary complexity, and syntactic structures. However, according to Wikipedia’s AI in Education page, these systems frequently mistake the following for machine-generated text:

- Non-native English speakers’ writing styles

- Highly technical or formulaic academic writing

- Texts edited by grammar-checking software
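To make "writing patterns" and "vocabulary complexity" more concrete, the sketch below computes three crude surface statistics for a passage. It is an illustration only: the function name `surface_features` and the choice of statistics are assumptions made for this example, not a description of how any actual detector works.

```python
import re
import statistics


def surface_features(text: str) -> dict[str, float]:
    """Compute a few crude stylometric statistics for a passage (illustrative only)."""
    # Rough sentence split: break after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    # Lowercased word tokens; apostrophes are kept so "don't" stays one word.
    words = re.findall(r"[a-z']+", text.lower())
    # Number of words in each sentence.
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        "avg_sentence_length": statistics.mean(lengths) if lengths else 0.0,
        # Population standard deviation: 0.0 means every sentence has the same length.
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Type-token ratio: distinct words divided by total words.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


# A deliberately formulaic, lab-report-style passage written by a person.
formulaic = "The sample was heated. The sample was cooled. The sample was weighed."
print(surface_features(formulaic))
```

For this passage the sentence lengths do not vary at all and half of the words are repeats. If a tool leaned heavily on statistics like these, uniform but entirely human writing of this kind could plausibly look unusual, which is one way to picture the false positives listed above.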

Defending Your Academic Reputation

When facing unjust accusations, students should:

- Maintain detailed drafts and research notes

- Use the version history kept by document editors

- Request human evaluation from faculty

- Cite Britannica’s definition of plagiarism to demonstrate an understanding of the concept

Institutional policies often lack clear protocols for appealing an AI detection result, so proactive documentation becomes crucial. Many writing centers now offer workshops that specifically address AI detection.
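For students who work in files rather than an editor with built-in version history, proactive documentation can be partly automated. The following is a minimal sketch, assuming a local draft file: the function name `snapshot_draft`, the log file name `snapshots.log`, and the naming scheme are all hypothetical choices for this example. It copies the draft under a timestamped name and records a SHA-256 hash of the copy.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def snapshot_draft(draft: Path, archive_dir: Path) -> Path:
    """Copy a draft into archive_dir under a timestamped name and log its hash."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    # UTC timestamp with microseconds so repeated snapshots get distinct names.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    copy_path = archive_dir / f"{draft.stem}-{stamp}{draft.suffix}"
    # copy2 copies the contents and also tries to preserve the modification time.
    shutil.copy2(draft, copy_path)
    # Hash of the copied bytes, appended to a plain-text log (hypothetical file name).
    digest = hashlib.sha256(copy_path.read_bytes()).hexdigest()
    with (archive_dir / "snapshots.log").open("a", encoding="utf-8") as log:
        log.write(f"{stamp}  {digest}  {copy_path.name}\n")
    return copy_path
```

Calling `snapshot_draft(Path("essay.docx"), Path("essay_history"))` at the end of each writing session (both paths are placeholders) builds a dated trail of drafts. Locally generated timestamps are not tamper-proof, so a trail like this supplements an editor's version history and research notes rather than replacing them.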
