The rise of AI detectors has created a perfect storm in education, pitting academic integrity concerns against the risk of false accusations. Institutions increasingly rely on artificial intelligence to screen student submissions, yet these systems frequently misidentify original work as AI-generated.

The Troubling Science Behind AI Detection Tools

Current detection algorithms analyze writing patterns using three questionable methods:

- Burstiness analysis (measuring sentence length variation)

- Perplexity scoring (measuring how predictable the word sequence is to a language model)

- Embedding comparison (comparing vector representations of the text with those of known AI-generated samples)
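To make the second and third methods concrete, here is a minimal Python sketch under toy assumptions. Perplexity is computed with an add-one-smoothed unigram model fit on a small reference text, and "embeddings" are replaced by bag-of-words count vectors compared with cosine similarity. Real detectors use large neural language models and learned embeddings, and the helper names (`tokenize`, `unigram_perplexity`, `cosine_similarity`) are hypothetical, not any product's API.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is discarded (naive, for illustration)."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model fit on `reference`.

    Toy assumption: a unigram model stands in for the neural language model a
    real detector would use. Lower values mean the text is more predictable.
    """
    ref_counts = Counter(tokenize(reference))
    total = sum(ref_counts.values())
    vocab_size = len(ref_counts) + 1  # +1 slot for a shared <unk> token
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no words")
    log_prob = 0.0
    for tok in tokens:
        count = ref_counts.get(tok, 0)  # every unseen word maps to <unk> (count 0)
        log_prob += math.log((count + 1) / (total + vocab_size))
    return math.exp(-log_prob / len(tokens))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity of word-count vectors (a crude stand-in for embeddings)."""
    va, vb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    reference = "the study shows that the results are significant and the method is robust"
    print(unigram_perplexity("the results are significant", reference))
    print(unigram_perplexity("quantum zebras dance gracefully", reference))
    print(cosine_similarity("the results are significant", reference))
```

Common, predictable wording yields lower perplexity, which is the property detectors associate with machine text; plain, formulaic human prose shares that property.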

However, as noted in Wikipedia’s AI in education article, these metrics fail to account for individual writing styles. Non-native English speakers and technical writers often score as “non-human” simply because they favor consistent sentence structures, which produce exactly the low variation that burstiness analysis treats as machine-like.
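The claim about consistent sentence structures can be illustrated with a short burstiness sketch. It assumes burstiness is measured as the coefficient of variation of sentence lengths (population standard deviation divided by mean); individual detectors may define it differently, the sentence splitter is a naive regular expression, and the function names are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count per sentence, splitting naively on ., ! or ? runs."""
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (assumed definition).

    0.0 means every sentence has the same length; higher means more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    uniform = ("The model was trained on data. The results were checked by hand. "
               "The code was run again today.")
    varied = ("It failed. After three weeks of careful debugging and several rewrites, "
              "the pipeline finally produced stable results on every benchmark we tried. Why?")
    print(burstiness(uniform), burstiness(varied))
```

The evenly sized passage scores 0.0, the most "uniform" possible value, even though nothing about it indicates machine authorship.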

When Algorithms Get It Wrong: Documenting False Positives

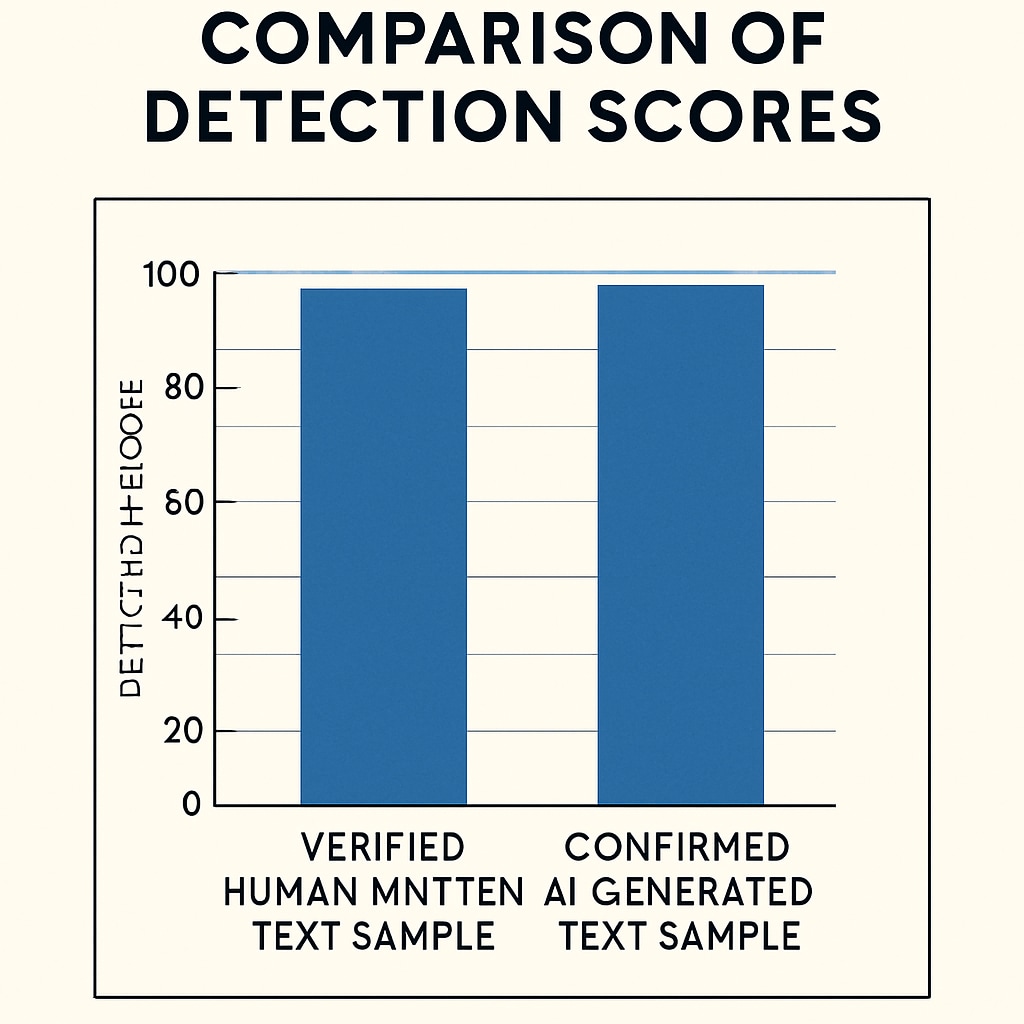

A 2023 Stanford study found that:

- 38% of human-written academic abstracts were flagged as AI-generated

- Marginalized students faced 22% higher false accusation rates

- No detector achieved above 80% accuracy across disciplines

This aligns with Britannica’s AI overview, which highlights the technology’s current limitations in nuanced judgment.
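A false positive rate matters more than it might first appear, because most submissions in a typical class are presumably human-written. The sketch below applies Bayes' rule to estimate what share of flagged papers would be false accusations. Only the 38% false positive rate comes from the study above, and applying it beyond academic abstracts is itself an assumption; the 90% detection rate for AI text and the 10% share of AI-generated submissions are hypothetical inputs chosen for illustration.

```python
def false_flag_share(fpr: float, tpr: float, prevalence: float) -> float:
    """Share of flagged submissions that are actually human-written (Bayes' rule).

    fpr: probability a human-written text is flagged.
    tpr: probability an AI-generated text is flagged.
    prevalence: fraction of submissions that are AI-generated.
    """
    false_flags = fpr * (1 - prevalence)  # human-written AND flagged
    true_flags = tpr * prevalence         # AI-generated AND flagged
    total_flags = false_flags + true_flags
    return false_flags / total_flags if total_flags else 0.0


def expected_false_flags(n_submissions: int, fpr: float, prevalence: float) -> float:
    """Expected number of human-written submissions that get flagged."""
    return n_submissions * (1 - prevalence) * fpr


if __name__ == "__main__":
    # 0.38 is the false positive rate reported above; 0.90 and 0.10 are hypothetical.
    share = false_flag_share(fpr=0.38, tpr=0.90, prevalence=0.10)
    count = expected_false_flags(n_submissions=200, fpr=0.38, prevalence=0.10)
    print(f"{share:.0%} of flags hit human writers; about {count:.0f} of 200 papers")
```

With those inputs, roughly 79% of flagged papers would be human-written, about 68 students in a hypothetical cohort of 200.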

Proactive Protection: Strategies for Students

To safeguard against erroneous claims:

- Maintain detailed draft versions with timestamps

- Use a version control system such as Git (for example, with repositories hosted on GitHub) for writing projects

- Request human review before submission deadlines

- Collect writing samples from earlier courses as style references
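Keeping timestamped drafts can be partly automated. The following is a minimal sketch, assuming drafts are plain files on your own machine: the hypothetical `snapshot_draft` helper copies a draft into an archive folder under a UTC-timestamped name and appends the copy's SHA-256 hash to a JSON Lines manifest (the file name `manifest.jsonl` is also an assumption). It is not a substitute for Git, and its timestamps reflect only your computer's clock.

```python
import hashlib
import json
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path

MANIFEST = "manifest.jsonl"  # hypothetical log file name


def snapshot_draft(draft: Path, archive_dir: Path) -> dict:
    """Copy `draft` into `archive_dir` with a UTC timestamp and log its SHA-256 hash."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    target = archive_dir / f"{draft.stem}_{stamp}{draft.suffix}"
    n = 1
    while target.exists():  # never overwrite an earlier snapshot with the same stamp
        target = archive_dir / f"{draft.stem}_{stamp}_{n}{draft.suffix}"
        n += 1
    shutil.copy2(draft, target)  # copy2 also preserves the original modification time
    digest = hashlib.sha256(target.read_bytes()).hexdigest()
    entry = {"file": target.name, "utc_time": stamp, "sha256": digest}
    with (archive_dir / MANIFEST).open("a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")  # one JSON object per line
    return entry


if __name__ == "__main__":
    # Demo in a throwaway directory so nothing is left behind.
    with tempfile.TemporaryDirectory() as tmp:
        draft = Path(tmp) / "essay.txt"
        draft.write_text("First draft.", encoding="utf-8")
        print(snapshot_draft(draft, Path(tmp) / "archive"))
```

Running it after each writing session builds a record of which version existed at which logged time, which can support a style or history comparison during an appeal.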

Institutional Reforms Needed

The education sector must:

- Establish standardized appeal processes for AI allegations

- Require human verification before academic penalties

- Disclose detection tool accuracy rates by discipline

- Train faculty on algorithmic bias in text analysis
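Disclosing accuracy by discipline requires an evaluation set in which the true origin of each text is known. Here is a minimal sketch of the tabulation, assuming such labeled records exist; the `EvalRecord` type, its field names, and the sample disciplines are hypothetical. It reports the false positive rate (the share of human-written texts that were flagged) alongside overall accuracy, because accuracy alone can hide how often human writers are wrongly flagged.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EvalRecord:
    discipline: str
    is_ai: bool    # ground truth: was the text actually AI-generated?
    flagged: bool  # did the detector flag it as AI-generated?


def rates_by_discipline(records: list[EvalRecord]) -> dict:
    """Per-discipline sample size, accuracy, and false positive rate."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for r in records:
        c = counts[r.discipline]
        if r.is_ai:
            c["tp" if r.flagged else "fn"] += 1
        else:
            c["fp" if r.flagged else "tn"] += 1
    report = {}
    for discipline, c in counts.items():
        total = sum(c.values())
        humans = c["fp"] + c["tn"]
        report[discipline] = {
            "n": total,
            "accuracy": (c["tp"] + c["tn"]) / total,
            # None when the discipline has no human-written samples to measure
            "false_positive_rate": c["fp"] / humans if humans else None,
        }
    return report


if __name__ == "__main__":
    sample = [  # hypothetical evaluation records
        EvalRecord("History", is_ai=False, flagged=True),
        EvalRecord("History", is_ai=True, flagged=True),
        EvalRecord("Chemistry", is_ai=False, flagged=False),
    ]
    for discipline, stats in rates_by_discipline(sample).items():
        print(discipline, stats)
```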

Therefore, while AI detectors serve as preliminary screening tools, institutions must recognize their technical limitations and implement safeguards against wrongful allegations.