AI, homework, and grade inflation are closely intertwined issues in education today. As artificial intelligence enters K-12 classrooms, the way teachers create and evaluate homework, and ultimately assign grades, is changing significantly.

This shift has sparked a lively debate: can AI bring much-needed objectivity to grading and help end grade inflation, or will it instead exacerbate educational inequalities?

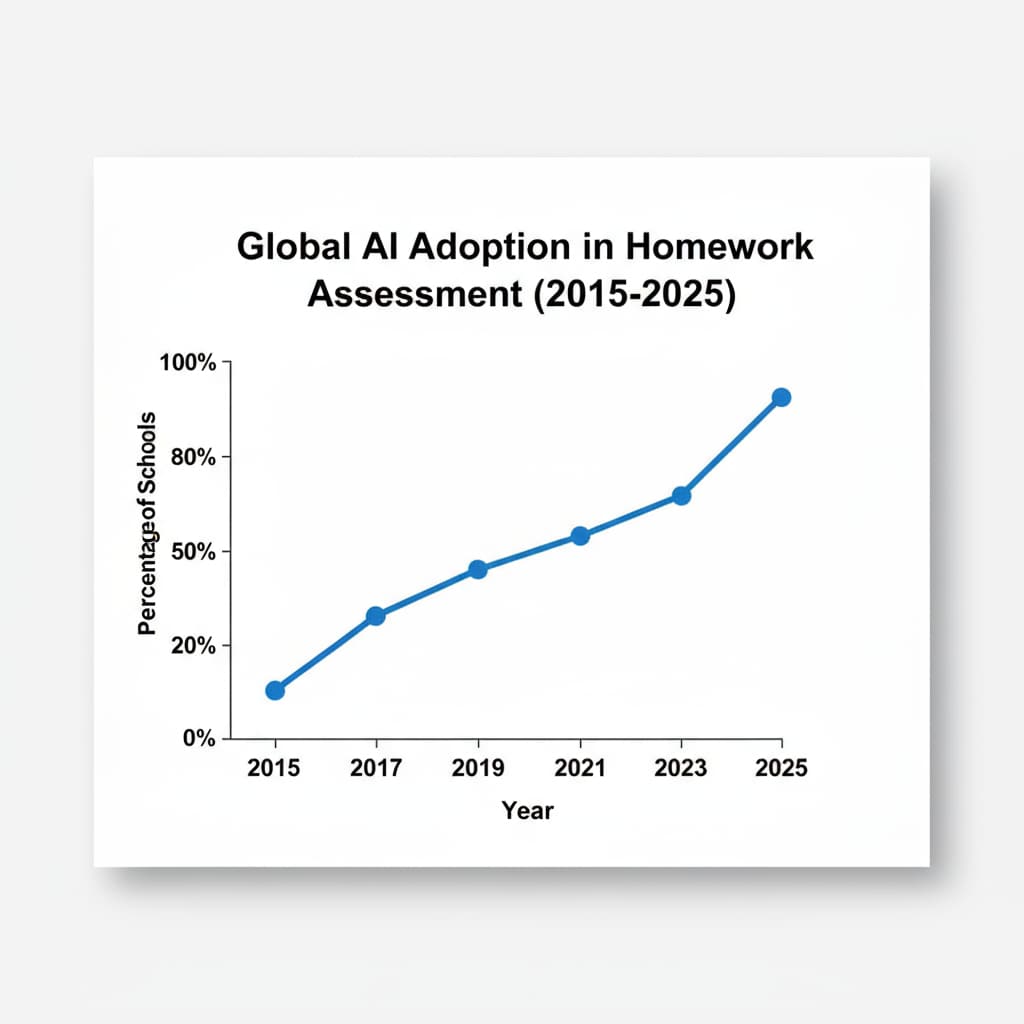

The AI Revolution in Homework Assessment

AI has introduced a wide range of tools that streamline homework assessment. AI-powered platforms can now automatically grade multiple-choice, fill-in-the-blank, and even some types of short-answer questions. These tools save teachers a great deal of time, and their developers claim they also grade more consistently. According to EDUCAUSE, a nonprofit association focused on technology in higher education, these automated grading systems use algorithms to compare student responses against a set of correct answers or predefined criteria. In theory, this removes the human bias that can contribute to grade inflation.
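To make "comparing responses against a set of correct answers" concrete, here is a minimal Python sketch of answer-key grading. It assumes each question stores a set of accepted answers and that responses are matched after light normalization (trimming whitespace and ignoring case). The `Question`, `normalize`, and `grade` names are hypothetical and do not come from any particular platform; commercial tools may use far more elaborate matching, especially for short answers.

```python
from dataclasses import dataclass


@dataclass
class Question:
    # Hypothetical question record: an ID plus every answer the key accepts.
    qid: str
    accepted: set[str]


def normalize(answer: str) -> str:
    # Collapse runs of whitespace, trim the ends, and lowercase,
    # so "  Photosynthesis " and "photosynthesis" match.
    return " ".join(answer.split()).lower()


def grade(questions: list[Question], responses: dict[str, str]) -> float:
    """Return the fraction of questions answered correctly (0.0 to 1.0)."""
    if not questions:
        return 0.0
    correct = 0
    for q in questions:
        # A missing response is treated as an empty (wrong) answer.
        response = normalize(responses.get(q.qid, ""))
        if response in {normalize(a) for a in q.accepted}:
            correct += 1
    return correct / len(questions)


# Illustrative answer key: one multiple-choice and two fill-in-the-blank items.
key = [
    Question("q1", {"B"}),
    Question("q2", {"photosynthesis"}),
    Question("q3", {"1776", "seventeen seventy-six"}),
]
student = {"q1": "b", "q2": " Photosynthesis ", "q3": "1775"}
print(f"{grade(key, student):.2f}")  # prints 0.67 (2 of 3 correct)
```

Even this tiny example encodes human choices, such as which spellings or phrasings count as correct. Those choices are exactly where consistency can come at the cost of fairness.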

The Threat of Exacerbating Inequality

However, the widespread adoption of AI in homework and grading has a downside. Not all students have equal access to the technology needed to benefit from AI-driven educational tools. Schools in affluent areas are more likely to invest in advanced AI software and hardware, while those in less privileged regions may struggle to keep up. As a result, students from disadvantaged backgrounds may be left behind. This digital divide could widen the educational gap between rich and poor students, potentially inflating grades for those with better access to technology while leaving others at a disadvantage. The National Center for Education Statistics has long reported on disparities in educational resources, including how new technologies can sometimes amplify these differences.

In addition, AI systems are only as good as the data they are trained on. If the training data contains biases, for example, if certain types of questions favor one group of students over another, the grading results will be skewed. This could produce unfair grade distributions and worsen grade inflation rather than solve it.
